ExaFLOW Project Helps Industry Exploit High Order CFD

Philipp Schlatter, Adam Peplinski, and Nicolas Offermans, Linné FLOW Centre and Swedish e-Science Research Centre (SeRC), KTH Mechanics, and Niclas Jansson, PDC

After three years of working on key algorithmic challenges in computational fluid dynamics (CFD), the eight consortium partners in the ExaFLOW Horizon 2020 FET (Future and Emerging Technologies) project reflect here on what has been achieved during the lifetime of the project. With three flagship runs, ExaFLOW has addressed several specific CFD problems – the differences between these problems serve to highlight the importance of the outcomes of the project for both industry and academia.

Fluid dynamics and turbulence are important in our everyday life, both from a technical and industrial point of view and when considering biological and geophysical systems. It turns out that a large fraction of our energy consumption is directly due to turbulent friction, that is, the dissipation of turbulent kinetic energy to heat caused by fluid viscosity. The study of turbulence is therefore an essential research discipline in today’s academic and industrial arenas, and has been referred to as the last unsolved problem of classical physics.

Obtaining numerical solutions to problems involving turbulent flows, which is an important aspect of CFD, is a prime contender for simulations aiming towards higher and higher levels of computational performance. This is mainly because, in turbulent systems, a large range of scales is active, and thus for high-speed vehicles – such as aeroplanes, cars and trains – there is virtually no limit to the necessary degrees of freedom. These active modes translate directly to the size of the system to be studied via numerical simulations. Therefore, CFD applications are particularly suited for extreme parallel scaling, in other words, to reach beyond today’s petascale systems towards exascale performance. Of course, these are challenging problems because, in turbulence, non-local interactions are important, which puts a heavy burden on communication between processors during the simulation.

In order to facilitate the use of accurate simulation models (that is, high-fidelity simulations) in exascale environments, ExaFLOW worked towards having three flagship runs near the end of the three-year project period. These flagship runs were designed with high industrial relevance in mind – addressing key innovation areas such as mesh adaptivity, resilience, power consumption and strong scalability. Each of the three flagship runs addressed specific CFD problems, thereby demonstrating the improvements made by ExaFLOW to their co-design applications: Nektar++, Nek5000, and OpenSBLI.

Flagship Run No. 1: Adaptive mesh refinement (AMR) of turbulent wall-bounded flow with Nek5000

A technique known as adaptive mesh refinement (AMR) was used to study the turbulent flow around a NACA 4412 wing section at a Reynolds number of Re = 200,000. (The NACA wing sections are mathematical models for shapes of aircraft wings that were developed to categorise the aerodynamic properties of different wing profiles, while keeping them simple and reproducible.) The framework that was used for the simulations was the Nek5000 code, which is based on the spectral element method (SEM). Using algorithms developed within ExaFLOW, the mesh that represented the wing section in the simulations could evolve dynamically depending on the estimated computational error at any given location in space and time: the simulation determined the optimal mesh on its own, thus making the design of an initial mesh much simpler. This means that the turnaround time for a computational engineer (when using this approach to solve another problem of this kind) is much shorter than with the approaches used in earlier studies of this type of problem, while still complying with the specified error measures for the solution. Using adaptivity, the simulation time could be reduced by more than 50% compared to previous studies of the same case. In addition, the improved flexibility in mesh resolution makes it possible to significantly increase the domain size, while avoiding elements with high aspect ratios in the far-field. This, in turn, results in a lower sensitivity to boundary conditions and a better condition number for the numerical operators.

The selected error estimators for the simulations were based on the spectral properties of the discrete solution. They were shown to provide a good estimate of the local conservation of mass and momentum, while being cheap to compute.
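
As a rough illustration of what an error estimate "based on the spectral properties of the discrete solution" can look like, the Python sketch below works on a single one-dimensional element. It is not the Nek5000 implementation. The function names, the exponential-decay fit over the last four modes, the 50-mode extrapolated tail and the two test functions are all assumptions made here for demonstration. The idea is that a well-resolved solution has rapidly decaying Legendre coefficients, so the size and decay rate of the highest modes indicate how much has been truncated.

```python
import numpy as np
from numpy.polynomial import legendre as L


def legendre_coefficients(f, order):
    """Project f on [-1, 1] onto the Legendre polynomials P_0..P_order."""
    x, w = L.leggauss(order + 1)              # Gauss-Legendre nodes and weights
    fx = f(x)
    coeffs = np.empty(order + 1)
    for k in range(order + 1):
        pk = L.legval(x, [0] * k + [1])       # P_k evaluated at the nodes
        coeffs[k] = (2 * k + 1) / 2 * np.sum(w * fx * pk)
    return coeffs


def spectral_error_indicator(coeffs, n_fit=4, n_tail=50):
    """Return (error estimate, decay rate sigma) for one element.

    Assumption of this sketch: the highest n_fit coefficients follow
    |a_k| ~ c * exp(-sigma * k). The estimate combines the energy of the
    last resolved mode with the extrapolated energy of n_tail unresolved modes.
    """
    order = len(coeffs) - 1
    k = np.arange(order - n_fit + 1, order + 1)
    log_a = np.log(np.abs(coeffs[k]) + 1e-300)   # guard against log(0)
    slope, intercept = np.polyfit(k, log_a, 1)
    sigma = -slope
    if sigma <= 0:
        return float("inf"), sigma               # no decay: treat as unresolved
    c = np.exp(intercept)
    # The squared L2 norm of a_k * P_k on [-1, 1] is a_k**2 * 2 / (2k + 1).
    last = coeffs[order] ** 2 * 2 / (2 * order + 1)
    k_tail = np.arange(order + 1, order + 1 + n_tail)
    tail = np.sum((c * np.exp(-sigma * k_tail)) ** 2 * 2 / (2 * k_tail + 1))
    return float(np.sqrt(last + tail)), sigma


# Hypothetical test data: a smooth profile and a sharp, under-resolved one.
order = 7
smooth_est, smooth_sigma = spectral_error_indicator(
    legendre_coefficients(np.exp, order))
rough_est, rough_sigma = spectral_error_indicator(
    legendre_coefficients(lambda x: np.tanh(10 * (x - 0.2)), order))
print(f"smooth: {smooth_est:.2e}, sharp: {rough_est:.2e}")
```

In an adaptive run, elements whose indicator exceeds a chosen tolerance would be marked for refinement, which is why a cheap per-element estimate matters.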

The mesh refinement process was done via the so-called h-refinement technique, where the number of degrees of freedom can be increased locally by octree (3D) splitting of selected elements. Extending Nek5000 to incorporate such capabilities required substantial modifications in the code. In particular, these changes included the introduction of interpolation operators at the interface between fine and coarse elements, a new implementation of the preconditioners, and the use of the external libraries p4est and ParMetis for the management and partitioning of the grid, respectively.
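
The octree splitting described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not the Nek5000 or p4est code: the `Element` class, the `refine` function and the `toy_indicator` are hypothetical names introduced here, and the elements are simple axis-aligned boxes. The sketch also omits the interpolation operators at fine-coarse interfaces, the 2:1 size balance between neighbouring elements that octree libraries typically enforce, and the repartitioning across processors.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Element:
    """Axis-aligned hexahedral element (hypothetical, for illustration only)."""
    lo: tuple        # (x, y, z) of the minimum corner
    hi: tuple        # (x, y, z) of the maximum corner
    level: int = 0   # refinement level, 0 for the initial mesh

    def split(self):
        """Return the 8 children obtained by halving the element in x, y and z."""
        mid = tuple((a + b) / 2 for a, b in zip(self.lo, self.hi))
        children = []
        for octant in product((0, 1), repeat=3):
            lo = tuple(m if o else l for o, l, m in zip(octant, self.lo, mid))
            hi = tuple(h if o else m for o, m, h in zip(octant, mid, self.hi))
            children.append(Element(lo, hi, self.level + 1))
        return children

    def volume(self):
        vol = 1.0
        for a, b in zip(self.lo, self.hi):
            vol *= b - a
        return vol


def refine(elements, indicator, tolerance, max_level):
    """One pass: split every element whose indicator exceeds the tolerance."""
    refined = []
    for e in elements:
        if indicator(e) > tolerance and e.level < max_level:
            refined.extend(e.split())
        else:
            refined.append(e)
    return refined


def toy_indicator(e):
    """Stand-in for an error estimate: grows towards a 'wall' at z = 0."""
    z_centre = (e.lo[2] + e.hi[2]) / 2
    return 1.0 / z_centre


mesh = [Element((0.0, 0.0, 0.0), (1.0, 1.0, 1.0))]
for _ in range(3):
    mesh = refine(mesh, toy_indicator, tolerance=1.5, max_level=3)
print(len(mesh), "elements after three passes")
```

Starting from a single cube, the refined elements cluster near the plane z = 0 while the far half of the domain stays coarse, mirroring how resolution concentrates near the wing surface in the flagship run.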

As a result of this work, the ExaFLOW partners believe that solution-aware adaptive simulation techniques will play a major role in future CFD, in particular for more complex flow situations where the physics is either not known a priori, or is changing quickly depending on outer circumstances, such as boundary conditions.

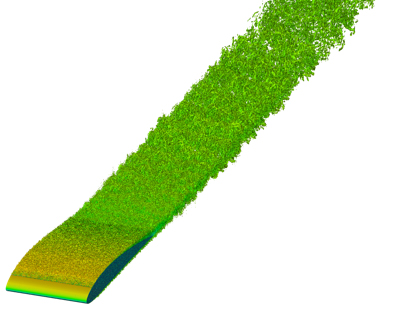

The figure above shows an instantaneous visualisation of the turbulent eddies (vortices) arising in the boundary layer around the wing profile. The flow is tripped at a short distance downstream of the leading edge, and then a spatially evolving pressure-gradient boundary layer develops. Downstream of the wing, a turbulent wake is established, which slowly decays due to viscous effects.

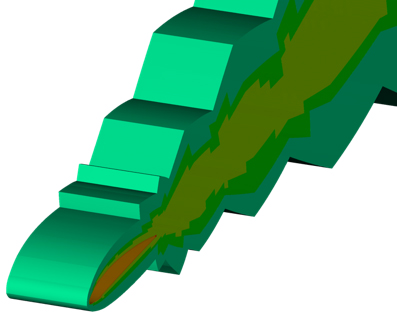

The figure above illustrates regions of the domain with refinement levels larger than 3, identified by different colours. It can clearly be seen that the highest resolution is required close to the wing surface, and in the centre region of the turbulent wake; these are the regions where the smallest eddies (and thus the highest local Reynolds number) are expected. These regions can adapt dynamically during the course of the simulation, for example, if the angle of attack is changed.

Flagship Run No. 2: Efficient code generation and error indicators with OpenSBLI

The second flagship run focused on a compressible version of the NACA 4412 wing profile as the actual test case, and used the finite difference code OpenSBLI. This compressible flow solver is based on automatic code generation methods developed during the course of the ExaFLOW project. The code efficiency comes from the fact that C++ code is automatically generated for the underlying architecture using a high-level language to express finite difference stencil operations. In common with the first flagship run, OpenSBLI also relied on error indicators for rapid validation of grids containing billions of points for simulations that were subsequently run on Europe’s fastest supercomputers, showing near perfect weak scaling up to 95,000 cores, and strong scaling up to 50,000 cores.
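
The principle of generating low-level code from a high-level stencil description can be shown with a minimal Python sketch. It does not use or reproduce OpenSBLI's actual interface: the `generate_kernel` function, the weight table and the emitted function signature are assumptions made for illustration. The stencil is described once as a table of offsets and standard central-difference weights, and a plain C/C++ loop is emitted from it as text.

```python
from fractions import Fraction

# Standard central-difference weights for the first derivative,
# keyed by formal order of accuracy, then by grid offset.
CENTRAL_FIRST_DERIVATIVE = {
    2: {-1: Fraction(-1, 2), 1: Fraction(1, 2)},
    4: {-2: Fraction(1, 12), -1: Fraction(-2, 3),
        1: Fraction(2, 3), 2: Fraction(-1, 12)},
}


def generate_kernel(name, order, array="u", out="du"):
    """Emit C/C++ source for a 1D first-derivative kernel (hypothetical layout)."""
    weights = CENTRAL_FIRST_DERIVATIVE[order]
    halo = max(abs(offset) for offset in weights)   # points skipped at each end
    terms = []
    for offset, w in sorted(weights.items()):
        index = f"i{offset:+d}"                      # e.g. i-2, i+1
        terms.append(f"({w.numerator}.0/{w.denominator}.0)*{array}[{index}]")
    expr = " + ".join(terms)
    return (
        f"void {name}(const double *{array}, double *{out}, int n, double dx) {{\n"
        f"  for (int i = {halo}; i < n - {halo}; ++i) {{\n"
        f"    {out}[i] = ({expr}) / dx;\n"
        f"  }}\n"
        f"}}\n"
    )


def apply_stencil(order, f, x, dx):
    """Evaluate the same stencil directly in Python, to check the weights."""
    weights = CENTRAL_FIRST_DERIVATIVE[order]
    return sum(w * f(x + offset * dx) for offset, w in weights.items()) / dx


kernel_source = generate_kernel("ddx_4th", order=4)
print(kernel_source)
```

Because the stencil lives in one table, switching the order of accuracy or targeting a different backend means changing the generator rather than hand-editing every loop, which is the kind of flexibility the code generation approach aims for.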

Flagship Run No. 3: Turbulent flow around the Imperial Front Wing with Nektar++

Our final flagship run was based on the turbulent flow around a front wing of a Formula 1 racing car, a complex geometry where the challenges of high-order meshing and solver robustness are evident. Utilising some of the developments that made it possible to do the first two runs in the ExaFLOW project, we were able to successfully run this geometry at the full experimental Reynolds number and with varying polynomial order in Nektar++, and to compare the results with state-of-the-art finite volume large eddy simulation (LES) and time-resolved experimental data. This demonstrated significant progress in leveraging Nektar++ for the goal of moving high-order CFD from being an academic tool to an industrial one.

All the ExaFLOW partners will continue their efforts to improve the co-design applications with the aim of pushing the new algorithms to higher technology readiness levels (TRLs), thereby making fast, accurate and resilient fluid dynamics simulations possible and accessible for both academic and industrial research and development at exascale.

For more information about ExaFLOW, visit www.exaflow-project.eu for links to all the reports and code developed by the project.