Research will lower data centres' energy use

The energy consumption of data centres continues to rise and will soon reach twice the total electricity consumption of Sweden. Tom Barbette, postdoc at Software and Computer Systems, and his team have developed a technique to lower data centres' energy use.

Hi Tom, please tell us more about your research.

"In the past years, data centres have grown like mushrooms. They are gigantic warehouses full of servers that run today's Internet services, like YouTube, Google, Facebook or Netflix. At the entry of those data centres are guardians, which dispatches the Internet traffic to hundreds of thousands of servers. Those so-called load-balancers have the essential task of keeping every server as busy as best as possible. If a server receives too much traffic, it will be "overcrowded", leading to jerky videos, or slow web pages' responsiveness. On the opposite, unloaded servers are a waste of both money and energy.

Our team has worked over the past years on improving those load-balancers, developing multiple techniques that allow extremely fast load-balancing at the entrance of data centres without breaking connections, which is the main challenge in building a load-balancer [Cheetah, NSDI'20]."

"In the past years, data centres have grown like mushrooms. They are gigantic warehouses full of servers that run today's Internet services, like YouTube, Google, Facebook or Netflix."

What impact can this have on society?

"These new techniques enable to lower the over-provision of data centres, which is the number of servers running for nothing "just in case", but without overloading them. This is an important metric as the worldwide data centres energy consumption continues to grow, and will soon reach twice the whole electric consumption of Sweden. And, of course, if the service providers pay less money to run fewer computers, your bills might be lower too!"

"These new techniques enable to lower the over-provision of data centres, which is the number of servers running for nothing "just in case", but without overloading them."

How does it work?

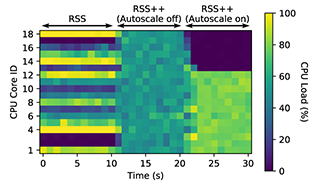

"With the RSS++ algorithm [CoNEXT'19], our team took a first real glimpse into the problem of load balancing inside a server, that is ensuring all CPU (processor) cores get the same amount of load. Load-balancing inside a server is usually done using RSS. RSS hashes packets in the network card to select a CPU core. The problem with hashing is that it is not perfect. With a real packet trace taken at our campus, we saw that sometimes a core receives three times more packets than another core.

So in RSS++, we use an optimisation algorithm to tweak the hashing mechanism of RSS and directly send an even amount of load to all the cores. This leads, in practice, to a scheduler that is network-driven."
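The idea of tweaking the hashing mechanism can be illustrated with a small sketch. It assumes the usual RSS layout, in which the hash of a packet selects a bucket and a table maps each bucket to a core. Everything else is an assumption made for illustration: `zlib.crc32` stands in for the network card's hash, the traffic is synthetic, and the greedy `rebalance` function is a simple substitute for the optimisation algorithm used in RSS++, not a reproduction of it.

```python
import zlib

N_CORES = 4      # hypothetical number of CPU cores
N_BUCKETS = 16   # hypothetical number of hash buckets in the network card


def bucket_of(flow):
    """Map a flow to a bucket (crc32 is a stand-in for the card's hash)."""
    return zlib.crc32(repr(flow).encode()) % N_BUCKETS


def core_loads(table, bucket_load):
    """Sum the load of every bucket onto the core the table assigns it to."""
    loads = [0] * N_CORES
    for bucket, core in enumerate(table):
        loads[core] += bucket_load[bucket]
    return loads


def rebalance(table, bucket_load):
    """Greedy sketch: move buckets from the busiest to the least busy core.

    A bucket is moved only if its load is smaller than the gap between the
    two cores, so every move narrows that gap and the loop terminates.
    """
    table = list(table)
    while True:
        loads = core_loads(table, bucket_load)
        hot = max(range(N_CORES), key=loads.__getitem__)
        cold = min(range(N_CORES), key=loads.__getitem__)
        gap = loads[hot] - loads[cold]
        movable = [b for b, core in enumerate(table)
                   if core == hot and 0 < bucket_load[b] < gap]
        if not movable:
            return table
        # Prefer the bucket whose load is closest to half the gap.
        best = min(movable, key=lambda b: abs(bucket_load[b] - gap / 2))
        table[best] = cold


# Synthetic traffic: 200 flows with uneven packet counts.
flows = {("10.0.0.%d" % i, 40000 + i, "192.0.2.1", 443): 1 + (i % 7) * (i % 5)
         for i in range(200)}
bucket_load = [0] * N_BUCKETS
for flow, packets in flows.items():
    bucket_load[bucket_of(flow)] += packets

static_table = [b % N_CORES for b in range(N_BUCKETS)]  # plain RSS-style table
before = core_loads(static_table, bucket_load)
after = core_loads(rebalance(static_table, bucket_load), bucket_load)
print("per-core load before:", before)
print("per-core load after: ", after)
```

Only the bucket-to-core table changes. The hash itself is untouched, so packets of one flow still land in the same bucket and therefore on the same core between two rebalancing rounds.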

Can you explain more about the challenges and your solutions?

"A data centre is composed of many servers. So the problem of load-balancing between servers is exactly the same and is quite bad when using hashing. However, this time, transferring anything between servers, even the tiny bit of state RSS++ does is very hard - and slow. Applying RSS++ on servers themselves will not be practical. So instead, we use something better than hashing, that would ensure that packets of the same microflows (i.e. connections to a web server like a user browsing Facebook) always end up on the same server.

In Cheetah, we proposed to push the "memory" into the packet itself. The client host is made to write what we call a cookie into the network header. Then, when the load balancer receives such a marked packet, it only has to read the cookie in the packet header to find where the packet should go. We do this in TCP by abusing timestamps, so it works with what is deployed today. But QUIC, the new protocol taking over the Internet, comes with a much cleaner solution built in: connection IDs."
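As a rough illustration of the cookie idea, the sketch below lets a load balancer pick any server for a new connection, hide that choice in a small cookie, and recover it from later packets without keeping a per-connection table. The encoding (server identifier XOR a keyed hash of the connection), the 16-bit cookie size, the `SECRET` key and the function names are assumptions chosen for the example and should not be read as Cheetah's exact format.

```python
import hashlib
import hmac

SECRET = b"demo-secret"  # hypothetical key known only to the load balancer


def _mask(flow):
    """16-bit keyed hash of the connection, used to obscure the server id."""
    digest = hmac.new(SECRET, repr(flow).encode(), hashlib.sha256).digest()
    return int.from_bytes(digest[:2], "big")


def make_cookie(flow, server_id):
    """Cookie handed out on the first packet of a connection."""
    return server_id ^ _mask(flow)


def server_from_cookie(flow, cookie):
    """Recover the server from a cookie echoed back in a later packet."""
    return cookie ^ _mask(flow)


# The load balancer is free to choose any server for a new connection,
# for instance the least loaded one (hypothetical load figures).
server_load = {0: 12, 1: 3, 2: 9}
flow = ("203.0.113.7", 51000, "192.0.2.1", 443)
chosen = min(server_load, key=server_load.get)
cookie = make_cookie(flow, chosen)

# Later packets carry the cookie, so they reach the same server
# even if the load figures have changed in the meantime.
server_load[chosen] += 100
print(server_from_cookie(flow, cookie) == chosen)
```

Because the decision travels with the packet, the load balancer can use any placement policy for new connections without breaking existing ones.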

What are you planning for 2021 and beyond?

"At the end of 2020, we presented a poster of our latest work, called CrossRSS [CoNEXT'20]. CrossRSS is a load-balancer (of course, it runs Cheetah!) that spreads the load uniformly even inside the servers but directly from the load-balancer.

Now, we're going to improve upon CrossRSS to predict future loads and re-organise the Internet traffic to achieve maximal efficiency, making the most of every CPU cycle to avoid energy waste, so stay tuned!"

Download picture, design of a load-aware, core-aware load-balancer (png 43 kB)

This work has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement No. 770889). This work was also funded by the Swedish Foundation for Strategic Research (SSF) and the Wallenberg AI, Autonomous Systems and Software Program (WASP).